Deep learning is a subset of machine learning that uses neural networks loosely inspired by the structure of the human brain. Like humans, it learns from examples. It can learn from millions of unstructured, uncorrelated data points, a volume that would take a human decades to work through. A deep learning model is also called a deep neural network. Companies have started to realize the value of tapping this vast pool of information to automate their processes, so demand for deep learning has been growing steadily.

How Deep Learning Works

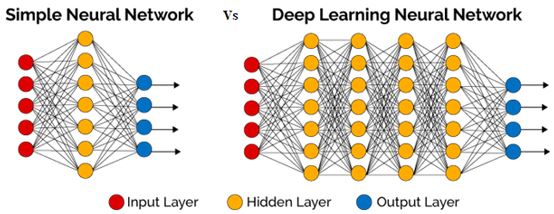

Deep learning methods are built on a structure inspired by the human brain, known as a neural network. A traditional neural network might have only two or three hidden layers, whereas a deep network can have 150 or more. Deep learning models are trained on labelled data using neural network architectures that extract features directly from the data, without human intervention. Convolutional neural networks (CNN or ConvNet) are one example of a deep learning network. Because the model learns the features itself, manual feature extraction is no longer needed, and this ability is a large part of why deep learning models often achieve high accuracy.
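To make the idea of stacked layers concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the random weights and the choice of ReLU as the activation are all assumptions made for illustration; a real network would learn its weights during training and would usually be built with a deep learning library.

```python
import random


def dense_layer(inputs, weights, biases):
    """One fully connected layer followed by a ReLU activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU keeps positive values only
    return outputs


def make_layer(n_in, n_out, rng):
    """Random placeholder weights; training would replace these."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(inputs, layers):
    """Feed the input through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


rng = random.Random(0)
# Hypothetical sizes: 4 inputs, three hidden layers of 8 units, 3 outputs.
layer_sizes = [4, 8, 8, 8, 3]
layers = [make_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

output = forward([0.5, -1.2, 3.0, 0.1], layers)
print(len(layers), "weight layers ->", output)
```

Making the network deeper only means adding more entries to `layer_sizes`; the forward pass itself does not change.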

How to train your Deep Learning models?

Training from scratch – This approach involves collecting a large labelled dataset and designing a network architecture that learns the features from the data.

Transfer Learning – This approach is the most widely used in deep learning applications. It works by fine-tuning a pre-trained model: a successful existing network is chosen and then built upon for the new task. Its advantage over the first approach is that it needs far less data, so computation time drops considerably.

Feature Extraction – Each hidden layer in a deep neural network learns certain features from the images it is trained on. These features can be pulled out of the network and used as input to another machine learning model.
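The feature extraction idea, which is also the core of transfer learning, can be sketched in a few lines of plain Python. Everything below is a toy assumption: the "pre-trained" layer has hand-set weights rather than weights learned from a large dataset, and the downstream model is a simple perceptron. The point is only to show a frozen layer producing features that a separate, smaller model is then trained on.

```python
def relu(x):
    return max(0.0, x)


# Hypothetical "pre-trained" hidden layer. In practice these weights would
# come from a network trained on a large dataset; here they are hand-set.
PRETRAINED_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
PRETRAINED_BIASES = [0.0, -1.0]


def extract_features(x):
    """Run the frozen layer and return its activations as features."""
    return [relu(sum(w * xi for w, xi in zip(ws, x)) + b)
            for ws, b in zip(PRETRAINED_WEIGHTS, PRETRAINED_BIASES)]


def predict(features, weights, bias):
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train_perceptron(features, labels, epochs=50):
    """Train only the new classifier; the frozen layer is never updated."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            error = y - predict(f, weights, bias)
            weights = [w + error * fi for w, fi in zip(weights, f)]
            bias += error
    return weights, bias


# XOR: not linearly separable on the raw inputs.
inputs = [[0, 0], [0, 1], [1, 0], [1, 1]]
labels = [0, 1, 1, 0]

features = [extract_features(x) for x in inputs]
weights, bias = train_perceptron(features, labels)
predictions = [predict(f, weights, bias) for f in features]

print("features:   ", features)
print("predictions:", predictions)
```

A perceptron cannot solve XOR on the raw inputs, but it can on the extracted features, which is why reusing a good feature extractor lets a small model succeed with very little training.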

Accelerating Deep Learning with GPUs

Using a graphics processing unit (GPU) can speed up computation significantly. It can reduce the time needed to train a network from days to hours.

Algorithms used in Deep Learning

• Multilayer Perceptron Neural Network (MLPNN) – It is composed of multiple perceptrons and uses a supervised learning algorithm, with at least one hidden layer between the input and output layers, to generate outputs from a given set of inputs. The layers of neurons are connected in a directed graph so that the signal flows through the nodes in a single direction. This type of model can be trained to learn the correlation between the input data set and the output vectors. It is used in applications such as image verification and reconstruction, speech recognition, machine translation and data classification.

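• Backpropagation – This is the foundation of neural network training. In supervised learning, the error measured at the output is propagated backward through the network, from output to input, to work out how much each weight contributed to it. The weights are then adjusted to reduce that error. This is called backpropagation.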

• Convolutional Neural Network (CNN) – It is also a feed-forward neural architecture with multiple layers. It is typically trained with supervised learning and applies convolutional filters to analyze grid-like data such as images.

• Recurrent Neural Network (RNN) – The recurrent neural network recognizes the sequential nature of a data set and predicts the next likely element. It is most effective on sequential data such as sound, time-series data and natural language. Unlike a feed-forward network, which treats each input independently, an RNN carries a hidden state from one step to the next, so it preserves information from earlier steps in the sequence. The same layer is applied repeatedly at every step, which is what makes it a recurrent layer. It can be used for sentiment classification, image captioning, speech recognition, Natural Language Processing, etc.

• Long Short-Term Memory (LSTM) – It is a type of recurrent neural network that allows patterns to be stored in memory for longer periods. It has the ability to recall data from memory or delete it. It is well suited to time-series data and is applied to a wide range of problems. It is trained with backpropagation, but it learns sequence data using memory blocks connected in layers instead of ordinary neurons. It is used for applications such as natural language translation and modelling, stock and trade analysis, sentiment analysis, etc.

• Generative Adversarial Network (GAN) – It is used for unsupervised learning. During training, the network automatically discovers patterns in the input data set and learns to generate new data that resembles it. A GAN consists of two networks pitted against each other, hence the term adversarial: a generator and a discriminator. The generator produces new data, while the discriminator tries to distinguish generated (fake) data from real data. GANs can be used for cyber security, healthcare, Natural Language Processing, speech processing, etc.

• Restricted Boltzmann Machine (RBM) – It is a stochastic neural network and a probabilistic graphical model. It performs filtering and binary factor analysis with restricted communication: units within the same layer are not connected to each other. It has one layer of visible units and one layer of hidden units. A bias unit is connected to all visible and hidden units. It is an energy-based model. It can be used for both probabilistic and non-probabilistic statistical models. Typical uses include feature learning, topic modelling, dimensionality reduction and collaborative filtering.

• Deep Belief Network (DBN) – It is based on unsupervised learning and is a generative model. It combines directed and undirected connections: the connections at the top of the network are undirected, while those in the lower layers are directed downwards. It is trained with a "greedy" layer-by-layer algorithm and consists of multiple layers of hidden units connected together. It is also an energy-based model and can benefit from unlabelled data. DBNs are useful for image recognition, video sequence recognition, face recognition, motion capture, etc.
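Since backpropagation underlies the training of most of the networks above, a small worked sketch may help. The code below trains a tiny multilayer perceptron on the XOR problem in plain Python. The network size, learning rate, number of epochs, sigmoid activation and squared-error loss are all assumptions chosen to keep the example short; real projects would normally rely on a deep learning library that computes these gradients automatically.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_params(n_inputs, n_hidden, seed=1):
    """Small random starting weights (hypothetical sizes and seed)."""
    rng = random.Random(seed)
    return {
        "w_hidden": [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                     for _ in range(n_hidden)],
        "b_hidden": [0.0] * n_hidden,
        "w_out": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b_out": 0.0,
    }


def forward(x, p):
    """Forward pass: input -> hidden layer -> single output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(p["w_hidden"], p["b_hidden"])]
    output = sigmoid(sum(w * h for w, h in zip(p["w_out"], hidden)) + p["b_out"])
    return hidden, output


def loss(data, p):
    """Mean of 0.5 * (output - target)^2 over the data set."""
    return sum(0.5 * (forward(x, p)[1] - y) ** 2 for x, y in data) / len(data)


def train(data, p, epochs=2000, lr=0.5):
    for _ in range(epochs):
        for x, y in data:
            hidden, output = forward(x, p)
            # Error signal at the output (derivative of loss through sigmoid).
            delta_out = (output - y) * output * (1 - output)
            # Propagate the error backward to each hidden unit,
            # using the output weights before they are updated.
            delta_hidden = [delta_out * w * h * (1 - h)
                            for w, h in zip(p["w_out"], hidden)]
            # Gradient descent step on every weight and bias.
            for j, h in enumerate(hidden):
                p["w_out"][j] -= lr * delta_out * h
                p["b_hidden"][j] -= lr * delta_hidden[j]
                for i, xi in enumerate(x):
                    p["w_hidden"][j][i] -= lr * delta_hidden[j] * xi
            p["b_out"] -= lr * delta_out


data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

params = init_params(n_inputs=2, n_hidden=3)
initial_loss = loss(data, params)
train(data, params)
final_loss = loss(data, params)

print("loss before training:", initial_loss)
print("loss after training: ", final_loss)
```

The two `delta` lines are the backward pass: the output error is computed first and then pushed back through the output weights to the hidden layer, which is the output-to-input direction described in the backpropagation entry.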

Applications of deep learning

• Autonomous vehicles – A huge amount of data is collected from sensors mounted all over the car and a model is built from it. The model is trained using a suitable deep learning algorithm and then tested for its response in a safe environment. Automatic lane detection, speed adjustment on highways and in traffic, obstacle detection and airbag deployment are all made possible by rigorous training of deep learning algorithms.

• Fake news detection – Deep learning can customize a news feed for each reader. The Internet has become a primary source of both genuine and fake news. Deep learning can be used to build classifiers that distinguish fake news from real news and remove the fake items from your feed.

• Natural Language Processing (NLP) – Closely tied to machine learning, NLP deals with analyzing and processing natural languages. Language modelling, Twitter analysis, text classification and sentiment analysis all fall under the umbrella of NLP, which makes heavy use of deep learning algorithms.

• Virtual Assistants – Siri, Alexa, Cortana and Google Assistant are all applications of deep learning. These assistants learn more about the user with each interaction. They learn to carry out commands by processing the user's natural language.

• Entertainment – Netflix, Hotstar, Amazon Prime and other entertainment platforms use deep learning to recommend what to watch next based on your viewing history.

• Visual recognition – Deep learning can sort images by the location detected in the photographs, by the faces in them, or by event or date. Large-scale visual recognition powered by deep learning algorithms is becoming an essential feature of smartphones and smart devices.

Author

Anupama kumari

M.Tech (VLSI Design and Embedded system)

BS Abdur Rahman University